Event information

Projects include developing antifouling zwitterionic materials, teaching algebra through animation, analyzing atmospheric distortions with infrared laser transmissions, and so much more.

Hosted by McMaster Engineering, The Centre for Career Growth and Experience and the McMaster Society for Engineering Research (MacSER), this free event brings together students, faculty, staff, industry and the public to celebrate curiosity, creativity and discovery.

Oh, and did we mention free ice cream*?

Details:

- Tuesday, August 19 from 11 a.m. to 3 p.m. – Drop-in style

- JHE field (outside of the John Hodgins Engineering building on McMaster University’s campus – look for the tent)

- Paid parking on campus – more information on Parking Services

*Ice cream while supplies last. We will do our best to ensure a variety of flavours but we can’t guarantee we’ll be able to accommodate all dietary needs.

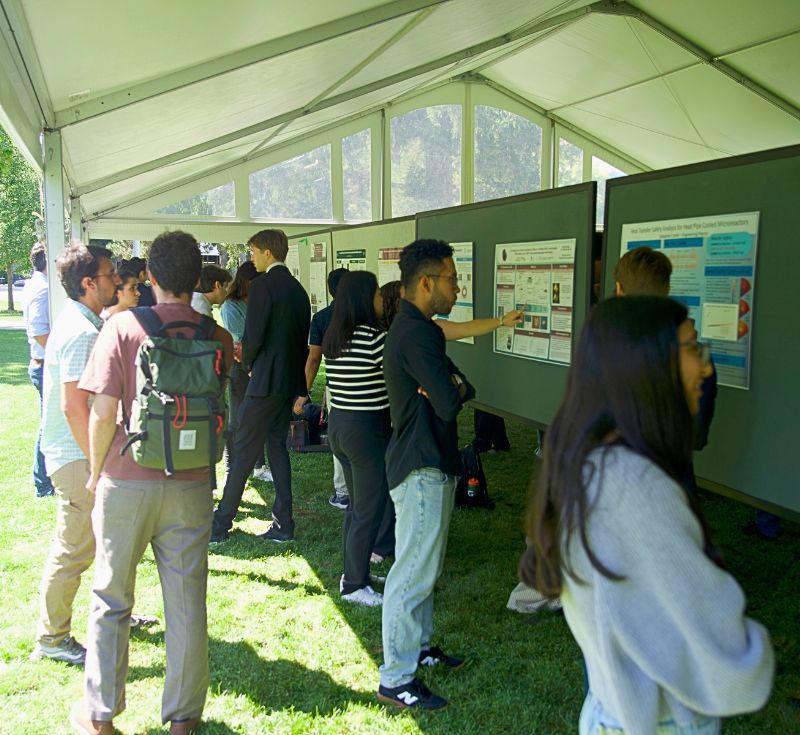

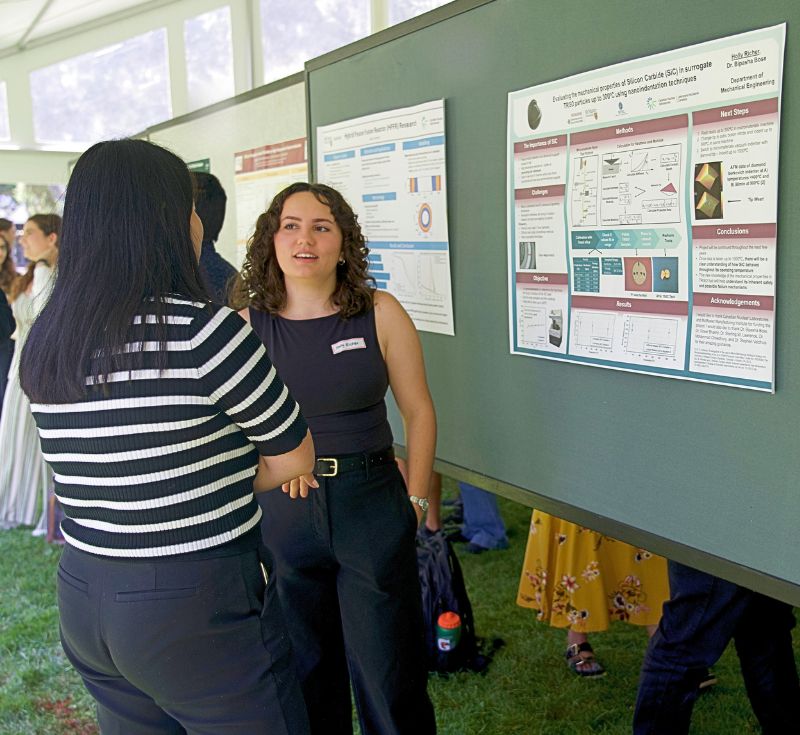

Photos from 2024 Showcase:

Dendritic fibrous nanosilica (DFNS) offers significant potential for biomedical applications due to its favourable physicochemical properties, including good biocompatibility, high specific surface area, tunable pore size and volume, and ease of surface modification. In our lab, we have synthesized amine-functionalized DFNS particles ~2000 nm in diameter. By also exploring carboxylic acid functionalizations, we can further enhance the loading and retention of cationic drugs into these particles, making DFNS well-suited for tunable pH-sensitive drug delivery. This will allow for either crosslinking within POEGMA-Ald/POEGMA-Hzd hydrogels or preferential loading of antibiotics and protein therapeutics for controlled release under varying pH conditions. Material properties such as rheological behavior, degradation, stiffness, and drug loading and release will be characterized and optimized. The resultant hydrogels have a wide range of potential applications, particularly in controlled drug release for wound dressing and healing applications.

ABSTRACT COMING SOON

Quaternary ammonium (QA) is a widely used anti-infective compound found in many surface disinfectants. Its antibacterial activity comes from its positive charge and long alkyl chain, which induce death in bacterial cells by disrupting the cell membrane through direct contact. Recent work from the Hoare lab involved incorporating QA into poly(oligo ethylene glycol) methacrylate (POEGMA) polymers to synthesize antibacterial materials for applications in wound dressings and surface coatings. However, the antibacterial potency of QA, measured by its minimum inhibitory concentration (MIC), tends to diminish once it is polymerized, likely due to steric hindrance and reduced mobility of the QA groups within the bulky POEGMA structure. To address this, this project aimed to synthesize POEGMA-QA polymers with QA groups tethered farther from the polymer backbone using extended OEGMA chains, minimizing interference from the surrounding structure. First, the cytocompatibility of the monomer was assessed against C2C12 mouse myoblast cells, and the tethered polymer showed improved cell viability relative to the original. In terms of antibacterial properties, the tethered monomer and polymer were tested against Gram-positive and Gram-negative bacteria and compared in their efficacy to the original, non-tethered QA. The newly synthesized monomer demonstrated 8-fold higher antibacterial potency against Pseudomonas aeruginosa compared to the original monomer, and both demonstrated similar antibacterial activity against Staphylococcus aureus. However, upon polymerization, the tethered polymer showed a 32-fold decrease in potency against S. aureus relative to the untethered polymer, although this may be attributed to contamination of the polymer. To improve the antibacterial properties of the tethered polymer, future work for this project will focus on optimizing sterilization techniques and synthesizing new formulations with varying concentrations of QA. Ultimately, this work will contribute to the development of more effective and cytocompatible antibacterial materials for use in wound care and surface disinfectants.

Authors: Alina Asad, Evelyn Cudmore, Mya Sharma, Todd Hoare
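
For readers less familiar with MIC comparisons, the short sketch below shows how a fold change in potency relates to MIC values. It assumes MICs come from a standard two-fold serial dilution series, which the abstract does not state, and the MIC numbers are hypothetical placeholders chosen only to reproduce an 8-fold difference. The function names are illustrative and not part of the authors' work.

```python
import math


def potency_fold_change(mic_reference: float, mic_test: float) -> float:
    """Return how many times more potent the test compound is than the reference.

    A lower MIC means higher potency, so a value above 1 means the test
    compound inhibits growth at a lower concentration than the reference.
    """
    if mic_reference <= 0 or mic_test <= 0:
        raise ValueError("MIC values must be positive")
    return mic_reference / mic_test


def twofold_dilution_steps(fold_change: float) -> float:
    """Number of two-fold dilution steps separating two MIC values."""
    if fold_change <= 0:
        raise ValueError("fold change must be positive")
    return math.log2(fold_change)


# Hypothetical MIC values in ug/mL, for illustration only (not study data).
original_monomer_mic = 256.0
tethered_monomer_mic = 32.0

fold = potency_fold_change(original_monomer_mic, tethered_monomer_mic)
print(f"fold change in potency: {fold}")
print(f"two-fold dilution steps: {twofold_dilution_steps(fold)}")
```

Under this assumption, an 8-fold change corresponds to three dilution steps and a 32-fold change to five.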

Paper cups are widely perceived as environmentally friendly; however, their common polyethylene (plastic) coating hinders biodegradability and complicates recycling. This project aims to address this issue by eliminating the plastic layer and replacing it with a biodegradable, cellulose-based coating. By utilizing cellulose, a naturally occurring and renewable polymer, the proposed design ensures full biodegradability without compromising the cup’s functionality. This innovation offers a sustainable alternative to conventional paper cups, reducing landfill waste and environmental pollution.

Authors: Angel La, Akhilesh Pal, Roozbeh Mafi, Kushal Panchal

Drug delivery vehicles are often hindered by low loading capacities, insufficient penetration, low targeting efficiency, and difficult scalability. The use of these complex vehicles in agriculture specifically remains untapped due to the combination of these factors alongside the added necessity for low cost. The Hoare Lab previously developed a low-cost biodegradable starch nanoparticle (SNP)-based Pickering emulsion which can hold hydrophobic drugs in its oil core. Penetration of the emulsion into the chloroplasts of Nicotiana benthamiana leaves was visualized with confocal microscopy. Antibacterial activity of the emulsion was assessed in vitro and demonstrated complete kill against Pseudomonas syringae. Cytotoxicity of the leading emulsion was assessed on 3T3 and C2C12 cell lines. Average cell viability remained above 70% after treatment with 1% emulsion or less. In planta studies were conducted to further assess the antibacterial efficacy and penetration of the emulsion. Preliminary results suggest effective in planta antibacterial activity of the emulsion; however, future experiments should refine the procedure. These recent experimental efforts demonstrated the capacity of this starch-based drug delivery system to stably contain hydrophobic antibacterial compounds and provide a strong foundation for defending plants against unwanted infections.

Authors: Asha Bouwmeester, Cameron Macdonald, Dr. Todd Hoare

The electrochemical reduction of carbon dioxide (CO2R) offers an approach to reducing the emissions of industrial processes through the utilization and conversion of CO2 into various products, such as carbon monoxide (CO), a key feedstock for synthesis gas. State-of-the-art CO2R systems are commonly operated in alkaline and neutral conditions to steer the reaction kinetics towards CO2R over the parasitic hydrogen evolution reaction (HER). However, these conditions lead to carbonate formation and salt precipitation, disrupting CO2 gas diffusion and product formation. Here, we sought to tackle this challenge by performing CO2R in modified acidic conditions to impede carbon loss into carbonates, as well as salt formation. Nevertheless, with a pure acid electrolyte (0.05 M H2SO4), the HER selectivity increases due to the increased concentration of hydrogen ions. Studies show that alkali cations provided in neutral solutions (e.g., Li+, K+, Cs+) are necessary to suppress HER and stabilize CO2R intermediates. Electrolyte engineering with alkali cations was investigated in this research to demonstrate its effectiveness in acidic systems. Adding Cs+ improved CO selectivity (>85%) while only minimally reintroducing salt formation.

Authors: Emilee Brown, Rem Jalab, Drew Higgins

As the world moves away from fossil fuels that contribute to global warming, the use of biofuels is rising. The rapid expansion of biodiesel has resulted in an oversupply of glycerol byproduct (10-16% w/w), causing industries to burn it as waste and release more greenhouse gases. It is crucial to find a cost-effective and efficient way to convert glycerol into products that are in high demand across various industries. We demonstrate a pulsed electrochemical system that converts glycerol into its value-added products with high selectivity and stability. Glycerol oxidation was carried out in a 3 M KOH + 1 M glycerol solution for 1.5 hours using a bifunctional nickel-gold catalyst with varying pulse conditions. Compared to pulse conditions of 1 V vs RHE and 1.25 V vs RHE, adding a surface cleaning pulse potential (0.55 V vs RHE) reduced activity decay from 42% to 19%. Oxidation with a Au electrode favoured glycolic acid (68%) at 1.25 V vs RHE, while the NiF/Au catalyst increased selectivity toward lactic acid (19%) and glyceric acid (26%). Periodic pulses remove AuOx and refresh Ni(OH)₂ active sites, allowing for sustained and tunable production of high-value acids. Here we demonstrate a durable, low-energy route to high-value acids from glycerol waste.

Authors: Hermione French, Mahdis Nankali

Generating electricity from corn stover via biogas is a promising approach for rural communities. This research focuses on optimizing biogas generation through a twin-screw extrusion pretreatment. Corn stover samples with different moisture contents were extruded at varying temperatures, speeds, and feed rates. Torque and exit temperatures were recorded during extrusion. After extrusion, moisture, water retention, specific surface area, and particle size were measured, and microscopy was conducted. Lower screw speed, higher moisture, and a higher feed rate correlated with a smaller particle size. Higher screw speed and lower moisture showed improved water retention. Feed rate displayed no correlation with water retention. Samples with low moisture content and high screw speed showed higher specific surface area. These results suggest that samples subjected to too much shear force underwent an irreversible drying process called hornification, which would prevent water from penetrating the fibers and enzymes from reaching active sites. Future testing should aim to minimise particle size while avoiding hornification of the material.

Authors: Isaac Khan, Michael Thompson, Heera Marway

Photocatalysis presents a sustainable method for environmental remediation and hydrogen production by utilizing solar energy to drive otherwise unfavorable reactions. Titanium dioxide (TiO₂) is a well-established photocatalyst due to its chemical stability and oxidative power. However, traditional slurry-based TiO₂ systems face limitations including poor recyclability, difficulty in recovery, and reduced operational lifetime. To address these challenges, this study focuses on fabricating floating TiO₂-polypropylene (PP) composites through thermal adhesion. TiO₂ nanoparticles (P25) are mechanically embedded onto PP particles by heating the polymer near its melting point, enabling adhesion without chemical binders. Composites are produced in three mass ratios of PP to TiO₂: 9:1, 2:1, and 2:3. These materials are characterized through scanning electron microscopy (SEM), thermogravimetric analysis (TGA), floating percentage measurements, and dye degradation assays using methyl orange (MO) as a model contaminant. The most promising composition, based on preliminary robustness and photocatalytic performance, is intended for further evaluation in hydrogen evolution reaction (HER) testing. This work aims to develop a simple, scalable, and reusable floating photocatalyst platform for use in water purification and green hydrogen generation.

Authors: Jasper Hopkins, Alibek Kurbanov, Julie Liu, Stuart Linley

When assembling biocompatible drug delivery vehicles, challenges such as maintaining controlled drug release and increasing vehicle structural integrity after bodily integration must be considered. The aim of this project is to construct a POEGMA-based interpenetrating network hydrogel, containing covalent and physical crosslinks, for reduced degradation and ultrasound-triggered pulsatile drug release. Of the two interpenetrating hydrogel networks, one would be ultrasound-labile, degrading under ultrasound application to release a portion of the stored drug. The other network would be ultrasound-stable to maintain suitable mechanical properties over longer periods of time for repeated drug delivery. The current double network is composed of POEGMA-Ald and POEGMA-Hzd (covalent, ultrasound-stable) with the addition of sodium alginate and calcium chloride (ionic, ultrasound-labile). Preliminary swelling and degradation testing of the double network hydrogels has shown increased structural integrity, while exploration of mechanical and rheological properties has contributed to further optimization of the hydrogel composition. Initial drug release testing finds higher rates of release at times of ultrasound application, though further composition alterations must be made to optimize drug-release timing during the application of ultrasound. The goal of this project is to develop a less invasive means of administering repeated, personalized medication doses for improved patient quality of life.

Authors: Julia Sulug, Kayla Baker, Todd Hoare

Glycosylation of recombinant therapeutic proteins is a critical quality attribute that varies greatly during production. Monitoring glycosylation during production is essential for ensuring product quality; however, many current analytical techniques are time- and labour-intensive, costly, and performed only after production is complete. We explore biolayer interferometry (BLI), a label-free optical technique, as a real-time process analytical technology (PAT) for glycosylation analysis. Using lectin-based biosensors with high specificity towards certain mannose-containing glycans (GlyM), we assess the glycosylation status of a protein-based SARS-CoV-2 vaccine antigen by comparing native and enzymatically deglycosylated samples. Deglycosylation was achieved using PNGase F, with reaction conditions optimized for maximal cleavage efficiency. BLI measurements with GlyM biosensors and SDS-PAGE gels confirmed the removal of targeted glycans, validating the method’s sensitivity and potential utility in bioprocess monitoring. These results support the application of lectin-based BLI for real-time glycosylation tracking in therapeutic protein production.

Authors: Juliana Brank, Nardine Abd Elmaseh, Avian Yuen, Trina Racine, Kyla Sask, David Latulippe

In recent decades, extensive use of polymer resins and fibers has led to an accumulation of waste polymers in landfills and the natural environment that threatens human health, promotes ecosystem degradation, and contributes to climate change. Of these, polypropylene (PP) is one of the most highly produced thermoplastics, with a global production of 56 million metric tons (tonnes) in 2018, projected to reach 88 million tonnes by 2026. Due to their high melt flow index and carbon-rich composition, thermoplastic wastes are an attractive material for recycling. To mitigate the detrimental environmental effects of waste polymers, it is desirable to identify opportunities to upcycle these materials into useful products, fuels, and chemicals. Here, we propose a floating heterogeneous waste polymer composite with embedded photocatalyst for sunlight-driven hydrogen production over wastewater, coined solar reforming. Floating PP with graphitic carbon nitride (g-C3N4, CNx) composites were synthesized while evaluating the effects of material composition and synthesis temperature on photocatalytic performance measured through methyl orange dye degradation and hydrogen production during solar reforming. It was hypothesized that decreasing the ratio of PP to g-C3N4 would increase its photocatalytic activity, while an optimal synthesis temperature would maximize activity by maximizing the photocatalyst surface availability. Similarly, it was theorized that the degree of photocatalytic activity was reflective of the amount of hydrogen produced during solar reforming. Findings based on these hypotheses would establish efficient synthesis of floating PP/CNx composites for future research into their solar reforming capabilities.

Authors: Julie Liu, Alibek Kurbanov, Jasper Hopkins, Stuart Linley

Biofouling is a significant challenge when designing materials for biomedical applications, as protein and cell adsorption can hinder medical device function and induce unwanted immune responses. Poly(oligo(ethylene glycol) methacrylate) (pOEGMA) and zwitterionic-based materials are non-antigenic and have been used in the field for their tunable mechanics and high antifouling capabilities, respectively. Copolymer formulations of OEGMA and zwitterionic monomers have been explored in the past, though further advancements are required to enhance their functionality and effectiveness. This study explores the synthesis of two novel pOEGMA-based zwitterionic monomers, Sulfobetaine-OEGMA (SO) and OEGMA-Sulfobetaine (OS). These new materials aim to leverage both the tunability of pOEGMA and the enhanced antifouling nature of zwitterions with a single monomer unit that contains both functionalities. The SO monomer is synthesized via a 3-step pathway involving two substitution reactions, followed by a sultone ring-opening to form a quaternary ammonium and sulfo group. The OS monomer is also synthesized via a 3-step pathway, first with the formation of a succinic acid derivative of OEGMA, followed by a Steglich esterification and a sultone ring-opening reaction. Monomer products and precursors were characterized using ¹H NMR and gel permeation chromatography (GPC). Once large-scale amounts of the SO and OS monomers are obtained, they will be further polymerized with functional aldehyde and hydrazide groups to form pSOA30 and pSOH30, as well as pOSA30 and pOSH30. These polymer systems will be combined to form hydrogels, which will then be characterized for their gelation time, rheological properties, and swelling and degradation profiles. In addition, hydrogels formed from pOEGMA, pSulfobetaine and pOEGMA-pSulfobetaine copolymers will be tested to compare with the new pSO and pOS materials. This work provides a foundational step in developing a novel class of antifouling materials for biomedical applications, with the potential to improve performance and longevity of implantable devices and biosensors by minimizing biofouling-induced complications.

Authors: Kaci Kuang, Nahieli Preciado Rivera, Norma Garza Flores, Todd Hoare

The Haber process, used to convert nitrogen to ammonia for agricultural and commercial use, is causing widespread nitrate accumulation in water systems. Nitrate accumulation is harmful to aquatic life and unsafe for human consumption. To mitigate nitrate accumulation and reduce reliance on the Haber process, the electrochemical conversion of nitrates to ammonia is being explored. Among the metal-based electrocatalysts being studied, Cu shows promise for its high selectivity towards ammonia. Although Cu shows high performance, its merit as an electrocatalyst suffers from its low stability. Recently, studies for CO2RR and ORR have found that adding Ag to Cu reduces its mobility. These studies further found that the hydrogen evolution reaction (HER) was suppressed in the CuAg bimetallic system. In this work, we explored CuAg as an electrocatalyst for the nitrate reduction reaction (NO3RR) to ammonia. We prepared and optimized a bimetallic CuAg system through galvanic exchange and compared its performance for ammonia production against a pure Cu catalyst. Here, we found an overall increase in ammonia faradaic efficiency at potentials between -0.2 and -0.6 V vs RHE. We postulate that the higher performance for the NO3RR system with the bimetallic foil is due to its HER suppression and decreased Cu mobility.

Authors: Leah Pare, Navid Noor, Drew Higgins

Canola meal, a protein-rich byproduct of canola, Canada’s highest-earning crop, is an underutilized source of plant-based protein. Processed with a twin-screw extruder, it has the potential to open new markets and economic opportunities for Canadian agriculture. This study explores twin-screw extrusion as a value-added process to permeate as many vesicles as possible and extract proteins, in addition to downstream filtering. However, protein entrapment within complex fiber structures and the presence of anti-nutritional factors limit both yield and functionality.

Authors: Manpreet Mahi

Municipal solid waste (MSW) and related low-carbon fuel sources may be used as a feedstock for syngas production. The steelmaking industry accounts for about 8% of human carbon emissions annually. Syngas can be used to directly reduce iron ore, lowering the carbon intensity of steel if the syngas is produced from low-carbon fuel sources. This work focuses on the design of a gasification-based method for producing syngas from MSW for steelmaking. The gasification process was modeled using Aspen Plus and tailored to the steelmaking use case, which generally favours a higher CO:H2 ratio compared to typical syngas production methods. A carbon-capture process was modeled in ProMax to account for the stringent limits of CO2 in DRI shaft furnaces and to further reduce the carbon footprint of the process. Results indicate that gasification of MSW and its derivatives produces a syngas with a CO:H2 ratio ranging from 1:2 to 2:1, depending on the feedstock. The higher ratios would be appropriate for the steel use case. Further research will conduct an eco-techno-economic analysis to determine if MSW gasification is a promising technology to economically decarbonize the steel industry.

Authors: Max Tomlinson, Mashroor Rahman, Yumna Ahmed, Giancarlo Dalle Ave, James Rose

Oceans are natural carbon sinks, regulating the amount of anthropogenic carbon dioxide (CO₂) emissions in the air. However, rising emissions are disturbing the oceans’ capacity to absorb CO₂, resulting in ocean acidification. To mitigate this and to support the removal of CO₂ from the atmosphere, ocean-based carbon capture offers a promising solution. This study explores a method for extracting CO₂ from electrochemically treated seawater, contributing to the development of ocean-based carbon capture technologies. To simulate the acidic conditions produced from seawater electrolysis, solutions of 0.2 M HCl and 4 mM KHCO₃ were continuously mixed in a PTFE bead-packed reactor. The generated CO₂ from the reaction was captured and stored in a gas chromatography bag for analysis. Throughout the experiments, parameters such as the flow rate and the overall system setup were varied to reduce oxygen contamination and increase CO₂ extraction efficiency. System modifications included sealing the reactor and adding a vacuum pump to the waste container. Initial trials showed high levels of oxygen alongside CO₂, indicating leaks. Sealing and improving the overall setup contributed to a drop in oxygen and an increase in CO₂ extraction efficiency to 64%. This work supports the advancement of scalable CO₂ capture methods that can feed into downstream utilization processes, such as electrochemical conversion to valuable products, thus contributing to a circular carbon economy and negative-emissions technologies.

Authors: Natalie Peluchon-De La Garza, Mahtab Masouminia, Ashkan Irannezhad, Drew Higgins

Biotherapeutics, including engineered viruses, represent a rapidly growing sector of medical advancement in oncology and vaccine therapy. However, water usage in production of these biologics is over 100-fold that for small molecule drugs such as Aspirin, making biomanufacturing a resource-intensive process [1]. Downstream chromatography, a process that removes harmful impurities, represents 62% of the overall water usage in typical facilities [2]. As biotherapeutics become more widely used, manufacturing modes that conserve water will become increasingly important for sustainable production. One key buffer type required for chromatography is the caustic cleaning agent used for clean-in-place (CIP) procedures between chromatography cycles. Buffer recycling of these caustic agents had previously been unexplored in the literature, despite their more hazardous nature and greater disposal risks in comparison to salt-based buffers. Our group’s previous work has shown that purified DNA has a similar ionic elution profile to viruses, making it a suitable surrogate feed for preliminary research into recycling opportunities. Sartobind Q AEX membranes were used on the ÄKTA avant 150 system to perform 10 sequential bind-and-elute experiments. Three conditions were tested to evaluate the impact on membrane binding capacity: zero recycling, partial recycling, and full recycling of the caustic agent (1 M NaOH). Across the three degrees of recycling, a comparable UV chromatogram was observed in all experiments with no significant change in binding capacity. A 90% reduction in caustic agent volume and a 13-15% reduction in overall buffer consumption were achieved. Overall, this work demonstrated a method for significant water reduction in the downstream biotherapeutic purification train.

[1] A. Jungbauer and N. Walch, “Buffer recycling in downstream processing of biologics,” Current Opinion in Chemical Engineering, vol. 10, pp. 1–7, Nov. 2015, doi: 10.1016/j.coche.2015.06.001.

[2] K. Budzinski et al., “Introduction of a process mass intensity metric for biologics,” New Biotechnology, vol. 49, pp. 37–42, Mar. 2019, doi: 10.1016/j.nbt.2018.07.005.

Authors: Nora Rassenberg, Claire Velikonja, Kaitlin Campagna, Cindy Shu, Brandon Corbett, Giancarlo Dalle Ave, David Latulippe
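
As a rough check on the buffer recycling figures above, the sketch below works through one way a 90% caustic saving could arise over 10 cycles. It assumes, hypothetically, that the zero-recycling baseline uses one fresh batch of caustic agent per cycle and that full recycling reuses a single batch for all cycles; the abstract does not describe the accounting, so this is an illustration and not the authors' method. The function names are likewise illustrative.

```python
def caustic_reduction(cycles: int, fresh_batches: int) -> float:
    """Fractional saving in caustic volume versus one fresh batch per cycle.

    Assumes every batch has the same volume (an assumption for this sketch).
    """
    if cycles <= 0 or not 0 < fresh_batches <= cycles:
        raise ValueError("need cycles > 0 and 0 < fresh_batches <= cycles")
    return 1 - fresh_batches / cycles


def implied_caustic_share(overall_reduction: float, caustic_saving: float) -> float:
    """Share of total buffer volume that is caustic agent, if the caustic
    saving is the only source of the overall buffer reduction (assumed)."""
    if not 0 < caustic_saving <= 1:
        raise ValueError("caustic_saving must be in (0, 1]")
    return overall_reduction / caustic_saving


# 10 bind-and-elute cycles, one batch of 1 M NaOH reused throughout (assumed).
full_recycling = caustic_reduction(cycles=10, fresh_batches=1)
print(f"caustic saving with full recycling: {full_recycling:.0%}")

# Back out the caustic share of total buffer from the reported 13-15% range.
for overall in (0.13, 0.15):
    share = implied_caustic_share(overall, full_recycling)
    print(f"overall reduction {overall:.0%} -> caustic share {share:.1%}")
```

Under these assumptions, the reported 13-15% overall reduction would imply that the caustic agent makes up roughly 14% to 17% of total buffer volume in the baseline process.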

Canola protein isolate (CPI) is a promising biopolymer for the development of sustainable materials, owing to its inherent hydrophobicity and potential for functional surface coatings. This study focuses on the development of biodegradable, antimicrobial coatings by enhancing CPI films through the grafting of essential oil-derived monomers via a Michael addition reaction. Initial efforts centred on optimizing CPI film formation using various plasticizers and essential oil sources. The resulting films were evaluated for mechanical properties, hydrophobicity (via water contact angle measurements), and antimicrobial activity. A notable increase in contact angle compared to control films confirmed enhanced hydrophobicity, supporting the potential of CPI-based coatings in sustainable and functional material applications. The antimicrobial efficacy of these films against E. coli and S. aureus is currently under investigation. These findings underscore the potential of CPI films for sustainable packaging applications, particularly in the agricultural sector.

Authors: Olivia Pino, Ava Ettehadolhagh, Todd Hoare

Advances in tissue regeneration and transplant therapies have been limited by conventional cell-sheet harvesting methods, which often apply harsh enzymes that may damage the extracellular matrix and cell-cell junctions. To overcome these issues, enzyme-free cell harvesting technologies are being explored. This project investigates the application of thermoresponsive poly(oligoethylene glycol methacrylate) (POEGMA)-based coatings as an alternative to enzymatic cell sheet harvesting, aiming to develop membranes that can both support cell growth and facilitate thermally triggered delamination. POEGMA polymers are promising for this application due to their inherent cytocompatibility, low protein adsorption, and thermoresponsive properties. The coatings are fabricated using the POEGMA polymers through a simple dip-coating procedure, forming thin-film hydrogels on the surface of cellulose acetate membranes. The POEGMA hydrogels support the growth of weakly adhesive cells by providing a hydrated, anti-fouling surface, while the porous cellulose acetate membrane beneath ensures the transport of nutrients to sustain cell viability. GFP-labelled 3T3 fibroblast cells were used to monitor adhesion and delamination behaviour across different coating formulations by incubating cells on the coated surfaces and then using the thermoresponsive nature of the polymers to trigger cell delamination at 4°C. Confocal microscopy showed that the coatings support cell adhesion and proliferation. However, while some delamination of the cells was observed at 4°C, it was inconsistent, and many of the cells remained adhered to the membrane. To optimize cell delamination, future studies will examine alternative coating methods and materials. Ultimately, this work establishes the potential for a scalable and customizable platform for engineering tissue constructs that offers a gentle, enzyme-free alternative to conventional delamination methods and holds promise for broad application in wound healing, grafting, and tissue engineering.

Authors: Prabhnoor Kaur, Mya Sharma, Todd Hoare

Wound dressings often adhere to the wound bed due to lack of consistent moisture, resulting in wound damage during dressing changes. The aim of this project is to develop and examine components of a wound dressing that can provide antibacterial activity and be seamlessly removed without causing wound damage in order to accelerate healing and prevent wound pain. Modified guar gum was hydrolyzed to achieve a hydrogel to leverage its high-moisture properties. Nano emulsions were prepared with probe sonication and characterized using Dynamic Light Scattering (DLS) and Zeta potential measurement to optimize nanoparticle size and stability over 30 days, thus optimizing for antibacterial activity. Two nanofiber formulations were investigated with Zeta potential measurement to compare stability of zwitterionic and deacylated nanofibers for structural integrity. Nano silica particles were functionalized and loaded with the antibacterial agent thymol, with release kinetics assessed over 24 hours using high-performance liquid chromatography (HPLC). Under Lab2Market, 40+ interviews with wound care experts were conducted to validate a market need. Nano emulsion studies showed that higher sonication amplitudes and shorter sonication times produced smaller nanoparticles. Aminated nano silica particles achieved 41% thymol release in 24 hours, though the observed initial burst and plateau deviated from typical release curves seen in literature, indicating the need for further investigation. Interview insights validated that infection control and low adherence are important design priorities for an innovative smart wound dressing to help accelerate healing and relieve pain in chronic wound patients.

Authors: Syed Umair, Parizad Katila, Hugo Lopes, Todd Hoare

Poly[oligo(ethylene glycol) methyl ether methacrylate] (POEGMA) hydrogels are extensively researched in biomedical applications for their tunable properties and biocompatibility. This research focuses on optimizing and characterizing hydrogels from a novel type of POEGMA polymer, tethered hydrazide-functionalized POEGMA. This material was prepared by directly tethering hydrazide functional groups to the OEGMA brushes situated along the polymer backbone. Through this synthetic approach, the tunability from the OEGMA brushes and the reactivity from the hydrazide group were simultaneously enhanced, thus allowing the material to self-gel in aqueous environments. Optimized hydrogel formulations demonstrated rheological and mechanical properties that were particularly suitable for 3D printing applications. Leveraging the material’s self-gelling properties, the compatibility of the material with electrospinning, another fabrication technique, was also explored. Thin mats of uniform, continuous fibers with minimal beading were achieved when electrospun with the tethered POEGMA. Anti-fouling properties of this material are currently of interest for further investigation, given its long brush structure and self-gelling characteristics.

Authors: Tracy Nguyen, Nahieli Preciado Rivera, Maham Munir, Norma Garza Flores, Todd Hoare

The internal structure of asphalt pavement is crucial for its mechanical performance and long-term durability. Understanding these microstructural characteristics is essential for optimizing pavement design.

This study utilized X-ray Computed Tomography (XCT) to investigate the internal microstructural features of asphalt mixtures, specifically focusing on air void distribution, aggregate packing, and binder continuity. High-resolution XCT scans of compacted asphalt specimens were analyzed to quantitatively and qualitatively assess air void content, aggregate orientation, and binder distribution.

Our findings demonstrate that XCT provides valuable insights into how mixture design and compaction quality impact the internal structure of asphalt. This research highlights XCT as a powerful tool for microstructural analysis, contributing to a better understanding of asphalt performance. Future work will explore failure modes, failure location and the effect of alternative filler materials to enhance pavement sustainability.

Structural fire engineering is increasingly adopting performance-based and probabilistic approaches to account for uncertainties associated with fire events and construction. The FIRESURE module enables uncertainty quantification in structural fire scenarios, supporting the development of risk-informed, fire-resilient designs. However, access to such tools is often limited by steep technical learning curves and confusing interfaces.

This project focuses on the development of a graphical user interface (GUI) for FIRESURE, with the goal of making advanced fire modelling and uncertainty analysis more accessible to industry professionals. The interface is being designed to simplify the user experience by allowing users to define fire scenarios, input probabilistic parameters (e.g., room size, ventilation, material properties), and visualize simulation outputs with a probability of failure/fuel load density curve; all without requiring in-depth coding knowledge. Built with an emphasis on usability and functionality, the GUI connects directly to the underlying FIRESURE engine, which was created by my supervisor.

By translating probabilistic modelling into a GUI, this work aims to bridge the gap between advanced fire research tools and practical engineering applications. Ultimately, the GUI enhances the impact of FIRESURE by promoting uncertainty-based fire design methods to a broader audience, allowing industry professionals to better assess and manage structural fire risks in any context.

| Hydrogen has emerged as a promising energy alternative in global decarbonization efforts. To enable widespread hydrogen use, it must be stored securely in large commercial volumes for extended periods. Underground Hydrogen Storage (UHS) addresses these challenges by allowing significant volumes of hydrogen to be safely stored in deep geological formations such as depleted oil and gas reservoirs, saline aquifers, and salt caverns. These formations are highly stable and have the capacity needed to create a reliable hydrogen supply for large-scale use. However, for UHS to be feasible, microbial impacts on these reservoirs must be thoroughly assessed. Microorganisms that naturally reside in these reservoirs can create challenges and affect reservoir quality. Commonly found microorganisms include sulfate-reducing bacteria (SRB), methanogens, and acetogens. While SRB and methanogens have been studied extensively, the impacts of acetogens remain largely unexplored. Acetogenic biochemical processes can induce mineral dissolution and alter reservoir properties, particularly porosity and permeability. To investigate these effects and characterize reservoir rock, we employ X-ray Diffraction (XRD), Fourier Transform Infrared Spectroscopy (FTIR), and X-ray Computed Tomography (XCT). These analyses allow the monitoring of mineralogical and morphological changes to reservoir samples. Fluid flow experiments are used to quantify saturation, permeability, and porosity. Additionally, aging experiments are conducted using formation fluid containing acetic acid, a metabolite of acetogens. Aging in this fluid induces biogeochemical transformations, allowing the quantification of mineral dissolution and of porosity and permeability changes over time. Preliminary results indicate that aging in acetic acid causes mineral dissolution and resultant increases in pH and conductivity.
By filling this knowledge gap on acetogens, this work can contribute important insights into maintaining reservoir integrity and protecting stored hydrogen. These findings are crucial for ensuring UHS remains secure and efficient in the long term, and for establishing its role as a cornerstone in the transition to renewable energy systems. |

I evaluated North Mountain Basalt’s suitability for subsurface CO₂ storage by characterizing its pore network from the micrometer down to the nanometer scale. Three-dimensional image volumes were captured using X-ray computed tomography (XCT) for larger pores and focused ion beam–scanning electron microscopy (FIB-SEM) for nanopores. Raw image stacks were pre-processed in Dragonfly and Fiji, then segmented using thresholding and machine learning to classify pore and mineral structures.

From the segmented volumes, I calculated bulk porosity and applied pore-network analysis to extract pore-size distributions. XCT imaging captured and plotted the larger pores in the rock, while FIB-SEM revealed the nanopores. Further processing could quantify accessible versus inaccessible pore networks to better predict the basalt’s characteristics for CO₂ injection and storage.
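
As a rough illustration of the bulk porosity step described above, the sketch below counts pore voxels in a segmented volume. The label convention (1 = pore, 0 = mineral), the function name `bulk_porosity`, and the tiny demo volume are assumptions for illustration only; they are not taken from the project's Dragonfly or Fiji workflow.

```python
def bulk_porosity(volume):
    """Return the fraction of voxels labelled as pore (1) in a segmented 3D volume.

    `volume` is a nested list indexed as volume[z][y][x]; the labelling
    convention (1 = pore, 0 = mineral) is an assumption for this sketch.
    """
    pore_voxels = 0
    total_voxels = 0
    for image_slice in volume:
        for row in image_slice:
            for voxel in row:
                if voxel == 1:
                    pore_voxels += 1
                total_voxels += 1
    if total_voxels == 0:
        raise ValueError("volume contains no voxels")
    return pore_voxels / total_voxels


# Hypothetical 2 x 2 x 2 segmented volume with two pore voxels out of eight.
demo_volume = [
    [[1, 0], [0, 0]],
    [[0, 1], [0, 0]],
]
demo_porosity = bulk_porosity(demo_volume)
```

Real XCT and FIB-SEM volumes contain millions of voxels, so an array library would normally replace the nested loops; the ratio being computed stays the same.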

These findings indicate that North Mountain Basalt has strong potential for carbon storage due to its large pore volume and surface area. This research represents an early step toward preparing the formation for future CO₂ injection.

| PyGeochemCalc (PyGCC) is an open-source Python package that performs various thermodynamic calculations from ambient to deep Earth conditions. PyGCC was developed to address the limited availability of accessible geochemical data and computational tools within the geochemical community. This project expands PyGCC’s functionality by enabling the generation of PHREEQC-compatible thermodynamic databases, which will enhance geochemical modelling capabilities across a variety of terrestrial planetary environments. The integration involved implementing data extraction from PHREEQC-formatted databases into PyGCC’s existing data structures and developing the first iteration of PHREEQC database-writing functionality. The runtime of database generation for all formats will be reduced by approximately 50% by reusing a data structure from the reading function in the writing function. Preliminary testing confirms successful reading of PHREEQC-formatted data within PyGCC. Further validation is required to ensure accuracy across multiple source databases and to verify full compatibility with PHREEQC. Once complete, this integration will streamline thermodynamic database preparation, improve computational efficiency, and expand the range of geochemical simulations supported by PyGCC. |

Digital twins play an important role in systems in which computing is to be off-loaded to a cloud infrastructure, e.g., due to resource constraints of the physical system. Pertinent examples are autonomous vehicles which require substantial computing power to perform advanced tasks, such as AI-enabled object detection. However, the architectural preferences, technological choices, task off-loading strategies, and safety mechanisms in automotive digital twins are currently not well understood. To investigate such issues, in this work, we develop a digital twin of an autonomous scale vehicle, capable of AI-enabled object detection, path computation, and the subsequent control of the vehicle along the computed path. We rely on state-of-the-art technology, including the lightweight ZeroMQ communication middleware and the YOLO convolutional neural network-based real-time object detection system. This project contributes to the rapidly growing field of safety-critical digital twins, and serves as a case study for multiple research projects in the host lab.

McMaster Start Coding and STaBL Foundation have introduced over 30,000 children to coding. One of our tools, MusicCreator, allows children to drag and drop notes to create a measure as a way of learning how to programmatically create melodies in ElmMusic, our music library. This is great for children who can use drag-and-drop, but is not accessible to children with visual impairments. MusicCreator 2.0 will be a more powerful version of MusicCreator, capable of creating multi-voice scores, and doing it without any visual interaction. The music, the code, and the state of the editor are all voiced using speech synthesis (standard on every modern web browser), and all input actions are keyboard-based. Our design incorporates lessons learned by analyzing existing music creation and accessible coding tools, as well as recent academic research. MusicCreator 2.0 is still being prototyped, but when complete will be the most accessible coding tool for children, which also happens to double as a nifty groove creator and orchestral score editor.

Mobile health applications often compromise user privacy through opaque data collection practices, relying on complex privacy policies that users rarely read or understand. This project addresses the disconnect between users and applications by developing an AI-driven system that detects privacy violations and provides real-time, easy-to-understand explanations during app usage.

A proof-of-concept sleep-tracking app was created with embedded UI elements that update dynamically to reflect privacy violations. The system uses an LLM to generate explanations based on Canada’s PIPEDA legislation, application privacy policies, and user consent configurations. These explanations are integrated directly into the interface through tooltips and interactive elements, eliminating the need to navigate complex documentation. It is essential that these explanations remain readable, logically consistent, and concise enough to display effectively on a mobile device.

The experimental framework examined the relationship between readability and logical consistency in AI-generated explanations, and how this balance changes with response length. Five lengths (15–50 words) and eight privacy violation scenarios were tested. Logical consistency was measured using NLI, while readability was assessed with Flesch-Kincaid grade level and word frequency analysis. Initial results suggest weak positive correlations between NLI and readability scores, between length and NLI, and between length and readability. Segmenting by length showed that length also affected the readability–consistency correlation.
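
For readers unfamiliar with the readability measure mentioned above, the following minimal sketch computes the standard Flesch-Kincaid grade level, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter is a crude vowel-group heuristic chosen for this illustration, and the function names are hypothetical; the project's actual tooling is not described in the abstract.

```python
import re


def count_syllables(word):
    """Approximate syllables as the number of vowel groups (at least one)."""
    vowel_groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(vowel_groups))


def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level using a heuristic syllable count."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59


# Two hypothetical explanations of the same privacy issue.
plain = flesch_kincaid_grade("This app shares your location.")
dense = flesch_kincaid_grade("This application disseminates geolocation information.")
```

With this heuristic, the second sentence scores a much higher grade level than the first, which is the kind of difference a readability comparison across explanation lengths would need to capture.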

Future work will include real-user testing to assess whether AI-generated explanations and embedded UI elements genuinely improve privacy transparency. This research represents cutting-edge work at the intersection of large language models, user privacy, and human-computer interaction.

| As artificial intelligence (AI) begins to take root in every industry, particularly those where human life is at stake (e.g., automotive, healthcare), the question of safety arises. Users must have confidence in AI components, especially in safety-critical systems. This confidence depends on a software engineer’s ability to justify every step of their development and deployment pipeline. Under the supervision of Professor Sébastien Mosser, whose research focuses on software safety, composition, and assurance cases, my work started with creating AI pipelines that could be transparently justified using formal reasoning tools. As I continued, I had the task of testing three different AI models for a specific purpose to determine which one performed the best. This brought my attention to the lack of standardized testing for AI models in general. The need arose to benchmark AI models consistently across formats and frameworks as a method of building safe software pipelines. This led me to develop BenchmarkEngine, a simple framework for benchmarking machine learning models with a plugin-based architecture. The system separates models, datasets, and metrics into moveable components, enabling cross-format benchmarking. Tests measured accuracy, inference time, model size, and memory usage, as well as comparisons between original and quantized models. The framework successfully produced performance evaluations for models from multiple ecosystems, displaying how format and compression affect both efficiency and accuracy. This standardized evaluation supports assurance cases by providing objective, reproducible evidence for model selection. Although the engine still has lots of room for improvement, the benchmarking inside a modular pipeline not only improves fairness and efficiency in AI model comparison but also contributes to the broader goal of software safety. 
By integrating consistent performance evaluation into the development process, the reliability of AI components in safety-critical and production environments is strengthened. |

| Students often struggle with internalizing the concepts of algebra taught in earlier grades and consequently struggle with higher-level math, particularly when they are required to learn about functions and calculus. Existing literature suggests that algebra expertise is predictive of positive educational outcomes in general, making algebra a pivotal subject on which to focus educational efforts. In particular, our goal is to enhance our existing online tools which allow students to create animations using algebraic code. We find that teaching periodic functions to students through static graphs is unintuitive. Students struggle to connect polynomial functions as they are written to graphs and animations. We used design thinking to structure our research. Animation is highly motivating for children, which puts pressure on mentors to help them create animations, but it was hard to go beyond giving them animation recipes to follow. We wanted them to understand the ingredients, and build their own animation recipes, in the same way that they build their own static graphics from basic shapes. Through a process of iterative prototyping and gathering feedback from coding mentors, we developed a tool following established UI principles. This tool shows students how to break complex animations into basic building blocks (scaling and translation in time and space), and how to translate between algebraic and function-composition representations. By giving students hands-on experience in generating creative animations and graphics, we make the learning process easier and more engaging. |

Generating scientific computing software (SCS) creates the opportunity for improved traceability, understandability, and deduplication in all software artifacts. From software requirements specification documents to code that is well documented with a variety of test cases, the Drasil framework, written in Haskell, aims to implement SCS generation. Using a stable knowledge base, Drasil is able to take “chunks”, structured modules of knowledge, and generate the many software artifacts needed to solve different scientific computing problems. This research worked to improve the scalability of Drasil’s knowledge database by implementing a new structure which does not restrict chunk types, rather than having to add a separate map for every new chunk type. Doing so required deduplicating all of the existing UIDs in the database, as duplicates would cause data to be overwritten in the new implementation. The process for fixing within-table duplicates involved determining where each instance was inserted, analyzing the type of duplicate problem (e.g., same UID with different data, chunk extraction, or exact duplicate), and then resolving the issue. For across-table duplicates, the main culprits were two chunk types: QuantityDict and ConceptChunk. QuantityDict was replaced with DefinedQuantityDict through an analysis of all QuantityDict constructors and their usage, and with DefinedQuantityDict now holding a definition similar to a ConceptChunk, ConceptChunks with corresponding DefinedQuantityDicts were no longer needed. This eliminated all duplicates related to symbol-concept extraction. The work also revealed many problems and insights within Drasil, which will contribute to future improvements. The new chunk database implementation has vastly improved the scalability of future case studies generated with Drasil and has uncovered many issues that can now be addressed.

| There are many factors, including safety, comfort, and efficiency, that influence the “best” trajectory for a vehicle. However, not all the factors have equal importance. In particular, safety must be ensured before any other factor is considered. One way to account for this is to integrate Responsibility-Sensitive Safety (RSS), a mathematical model formalizing collision avoidance, with the Rulebooks framework. In Rulebooks, realizations (world trajectories) are ranked based on a pre-ordered set of rules called a rulebook. With safety (RSS) as the top-priority rule, realizations that do not violate RSS will always be ranked higher than those that do violate RSS. The lower-priority rules can then be used to differentiate the realizations based on other desired properties without compromising the RSS safety ranking ensured by the top-priority rule. As a proof of concept, a rulebook of two rules is proposed: the top-priority RSS rule (currently accounting for longitudinal same direction and lateral safe distances only) and a secondary rule of progress to destination. With this rulebook, a realization will be primarily ranked on adherence to RSS, and then secondarily ranked on its progression towards the destination. The RSS rule uses an evaluation tool such as Signal Temporal Logic (STL) or a function to determine whether a realization follows RSS or not. The second-priority rule uses a function that calculates a realization’s progress towards a desired destination. In addition to creating this rulebook, simulations will be used to rank potential realizations for different scenarios. These simulations can empirically evaluate whether implementing the RSS rule as the top-priority holds the same guarantees as RSS (assuming there is at least one input realization that guarantees RSS) while also ensuring that lower-priority rules are considered. |
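
The two-rule ranking described above can be sketched in a few lines. This is a simplified illustration, not the project's implementation: it checks only the RSS longitudinal same-direction safe distance, uses a plain lexicographic sort in place of the general Rulebooks pre-order, and the `Realization` class, its fields, and all parameter values (response time `rho`, acceleration and braking limits) are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Realization:
    name: str
    samples: list       # (gap_m, v_rear_mps, v_front_mps) at each time step
    progress_m: float   # distance covered towards the destination


def rss_min_gap(v_rear, v_front, rho=1.0, a_max=2.0, b_min=4.0, b_max=8.0):
    """RSS minimum longitudinal gap for two vehicles driving in the same direction.

    The rear car may accelerate at a_max during the response time rho and then
    brakes at b_min; the front car may brake as hard as b_max.
    """
    gap = (
        v_rear * rho
        + 0.5 * a_max * rho ** 2
        + (v_rear + rho * a_max) ** 2 / (2 * b_min)
        - v_front ** 2 / (2 * b_max)
    )
    return max(0.0, gap)


def violates_rss(realization):
    """True if the gap drops below the RSS minimum at any time step."""
    return any(
        gap < rss_min_gap(v_rear, v_front)
        for gap, v_rear, v_front in realization.samples
    )


def rank(realizations):
    """Safe realizations first; ties broken by greater progress."""
    return sorted(realizations, key=lambda r: (violates_rss(r), -r.progress_m))


# Hypothetical candidates: both cars at 10 m/s, so the minimum gap is 22.75 m.
candidates = [
    Realization("aggressive", [(15.0, 10.0, 10.0)], 120.0),
    Realization("cautious", [(30.0, 10.0, 10.0)], 80.0),
    Realization("balanced", [(25.0, 10.0, 10.0)], 100.0),
]
ranking = [r.name for r in rank(candidates)]
```

Here the "aggressive" realization makes the most progress but is ranked last because it violates the top-priority rule, while the two safe realizations are ordered by progress.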

McMaster Start Coding, in collaboration with the STaBL Foundation, has introduced coding to over 30,000 students through a range of in-person and online workshops. However, measuring student understanding—particularly in virtual settings—remains a persistent challenge. To address this, we developed Beanstalk 2.0, an enhanced version of our original educational quiz app. Inspired by platforms like Kahoot, Beanstalk 2.0 offers a dynamic, visually engaging environment designed to assess student comprehension in real-time while maintaining a fun and competitive atmosphere.

To evaluate the effectiveness of Beanstalk 2.0, we are integrating the app into both our Summer and in-class programming workshops. We collect and analyze quantitative data such as student response accuracy and changes in correctness over time, enabling us to track learning progress. Additionally, we gather qualitative feedback from workshop mentors to assess the app’s impact on their ability to teach and engage students.

Preliminary observations suggest that Beanstalk 2.0 not only enhances student participation but also provides instructors with valuable insights into student learning. By combining data analytics with user feedback, we aim to iteratively refine the app and establish it as a reliable tool for real-time formative assessment in coding education.

Student engagement during the learning process can impact student academic achievement, depth of retention, and course satisfaction. Instructors use many strategies to keep their students engaged, including providing personalized feedback and guidance in multiple components of the course. However, this can be challenging in large classes, such as computer science courses that continue to grow in size. One strategy to guide student learning at scale is to motivate students to self-explain after learning a new concept so that they can identify their learning gaps. While self-explanations have been found to improve student performance, participation tends to decrease over time, necessitating strategies to maintain student motivation and drive student engagement. In this study, we evaluate methods to personalize students’ self-explanation processes to increase student engagement and improve student learning experiences: fixed guiding prompts vs. LLM-personalized prompts after explaining to identify learning gaps.

Students reported increased satisfaction and perceived usefulness, and spent longer self-explaining, when using the LLMs. We also found LLMs to be an effective tool in evaluating the quality of self-explanations, demonstrating correlations between quality scores and student performance. To further explore factors influencing personalized learning and engagement, we examined the role of language background in shaping student learning experience. Non-native students often face language-related challenges when expressing their understanding in academic settings. We investigate how native and non-native students’ experiences with weekly self-explanations influence their engagement in learning and course performance. Although there was no significant difference in comfort using voice, most students preferred reflecting in English, while a few valued the option to use another language. Notably, non-native English speakers appeared to engage more thoughtfully with weekly questions despite similar performance outcomes. This suggests that language barriers may not have posed a major challenge for non-native students in using voice reflections.

Existing approaches to robot visual navigation can be broadly categorized into classical modular pipelines, structured learning-based methods and deep reinforcement learning (DRL). Classical pipelines combine exploration, visual SLAM, and path planning using hand-designed modules. Structured learning-based approaches retain this modularity but instead learn components such as spatial memory in the form of a topological or metric map. While both offer interpretability and modular reasoning, they lack end-to-end optimization. In contrast, DRL enables end-to-end learning from raw image data but incurs high computational costs from reliance on convolutional operators and the use of over-parameterized models to infer mapping, localization and path planning. In this work, we propose Tangled Program Graphs (TPG) as a framework for learning modular, interpretable, and end-to-end trainable policies for visual navigation. TPG evolves an acyclic policy graph from register-based modules that coordinate through a bid-based mechanism. Evolutionary selective pressure automatically adapts the graph topology and module allocation based on task complexity, eliminating the need for manual architecture tuning. Empirical results in Gazebo, a visually realistic physics-based simulator, show that our proposed method (1) matches state-of-the-art deep reinforcement learning methods at a fraction of the computational cost, (2) generalizes across goals and environments, and (3) transfers robustly to a real robot under compute-constrained conditions using domain-randomized simulation training.
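
To make the bid-based coordination concrete, here is a minimal sketch of how a policy graph of this kind selects an action: each program in a team bids on the current observation, the highest bidder wins, and its action is either an atomic action or a pointer to another team. The bid functions below are hand-written stand-ins for evolved register-based programs, and the class names, observation layout, and action names are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass
class Program:
    bid: Callable[[List[float]], float]  # stand-in for an evolved register program
    action: Union[str, "Team"]           # atomic action or pointer to another team


@dataclass
class Team:
    programs: List[Program]

    def act(self, observation):
        """Follow the highest bidder until an atomic action is reached."""
        winner = max(self.programs, key=lambda p: p.bid(observation))
        if isinstance(winner.action, Team):
            return winner.action.act(observation)
        return winner.action


# Hypothetical observation: [left_clearance, front_clearance, right_clearance].
turn_team = Team([
    Program(bid=lambda obs: obs[0], action="turn_left"),
    Program(bid=lambda obs: obs[2], action="turn_right"),
])
root_team = Team([
    Program(bid=lambda obs: obs[1], action="forward"),
    Program(bid=lambda obs: 1.0, action=turn_team),
])

open_path = root_team.act([0.5, 2.0, 0.3])
blocked_path = root_team.act([0.5, 0.4, 0.9])
```

In the evolved setting, the graph topology and the programs themselves are produced by selection rather than written by hand; the sketch only shows how a decision flows through the graph.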

| Magnetic actuation systems can occupy space in the surgical field, hindering the efficacy of a surgery by delaying rapid intervention and instrument swapping. To mitigate challenges, a platform with up to three degrees of freedom to control an Under the Table Magnetic Actuation System (UTMAS) is proposed. Inspired by Cartesian 3D printer designs, the system enables scalable XY (and optional Z) motion across a 60×90 cm workspace, supporting loads up to 20 kg. The mechanical framework incorporates T-slotted aluminum rails, GT2 belt-driven actuation, and stepper motors for repeatable motion. The system is controlled through a PyQt5 graphical user interface (GUI) that communicates with an Arduino through non-blocking serial communication. Real-time motion feedback is visualized using a multi-view GUI display. The platform supports both manual control (via push buttons or WASD keyboard input) and automated routines, allowing users to define movement coordinates and update speeds. The Arduino handles low-level motor control, including homing sequences, limit switch handling, and position tracking. Validation experiments confirm accurate position and speed control under varying conditions, demonstrating system reliability. The automated platform for UTMAS offers a user-friendly solution for precise magnetic actuation beneath the surgical table, providing unobstructed operating fields. It is adaptable to surgical, experimental, and research settings in which coordinated microrobot navigation is required. Future work involves including a Z axis for three degrees of freedom and position encoders for enhanced location precision. |

Photoplethysmography (PPG) is a non-invasive technology used to measure changes in blood volume. This technology is mostly used for determining heart rate and blood pressure in smart watches and has recently been studied for its potential to give information on blood glucose levels (BGL). In this study, the VitalDB dataset was used to provide PPG signals from 6388 patients spanning several hours and the corresponding BGL, which were used to extract 36 statistical and spectral features and 60 heart rate variability (HRV) features. Multiple machine learning models, including Support Vector Regressor (SVR) with an RBF kernel, Random Forest Regressor (RFR), eXtreme Gradient Boosting (XGBoost), Histogram-Based Gradient Boosting (HBGB), Light Gradient Boosting Machine (LightGBM), and Categorical Boosting (CatBoost), were then trained to predict BGL from the extracted features. The aim is to investigate the effects of various feature subsets on these models and to identify the best-performing combination of feature subset and model.
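
As an indication of what HRV features look like, the sketch below derives three common time-domain measures (mean heart rate, SDNN, and RMSSD) from pulse peak times. It assumes peaks have already been detected in the PPG signal; the function names and sample values are hypothetical, and the study's own 60 HRV features are not listed in the abstract.

```python
import math
import statistics


def inter_beat_intervals_ms(peak_times_s):
    """Convert successive pulse peak times (seconds) to intervals (milliseconds)."""
    return [(b - a) * 1000.0 for a, b in zip(peak_times_s, peak_times_s[1:])]


def hrv_time_domain(ibi_ms):
    """Compute mean heart rate, SDNN, and RMSSD from inter-beat intervals."""
    if len(ibi_ms) < 3:
        raise ValueError("need at least three inter-beat intervals")
    successive_diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return {
        # Average heart rate in beats per minute.
        "mean_hr_bpm": 60000.0 / statistics.mean(ibi_ms),
        # Sample standard deviation of the intervals.
        "sdnn_ms": statistics.stdev(ibi_ms),
        # Root mean square of successive interval differences.
        "rmssd_ms": math.sqrt(statistics.mean([d * d for d in successive_diffs])),
    }


# Hypothetical peak times alternating between 0.8 s and 0.9 s beats.
peaks = [0.0, 0.8, 1.7, 2.5, 3.4]
features = hrv_time_domain(inter_beat_intervals_ms(peaks))
```

Each window of a recording would yield one such feature vector, which can then be paired with the corresponding BGL value for regression.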

Depression is one of the most common mental health disorders, affecting a significant portion of the globe whilst accounting for a majority of suicides. Depression, clinically diagnosed as MDD (Major Depressive Disorder), can be correlated with the relationships among a plethora of biological markers. In this review, novel advancements in the last five years for electrochemical biosensors targeting five critical biomarkers associated with depression are discussed. The main highlight is the performance of various sensors and the different materials used for their enhancement, including metals, carbon, polymers, and nanoparticles, to detect low levels of the five selected biomarkers, from nanomolar to picomolar. Sensing mechanisms such as cyclic voltammetry (CV) and electrochemical impedance spectroscopy (EIS) are also discussed. Despite having an adequate limit of detection for sensing biomarkers, many of these sensors are yet to be applied in living environments with interferents and various external factors. Areas of improvement for practical use, such as wearable and point-of-care (PoC) devices, are identified, as well as possible solutions. This review focuses on centralizing the information regarding the enhancement of biosensor performance through novel materials and AI integration. Thus, this review offers a significant contribution to the future improvement of MDD biosensors for user applications and to the understanding of the disease.

Data structures such as tensors, graphs, and meshes are widely used in AI and scientific applications, but they often require large amounts of storage and computation. Compression techniques can reduce data movement and the number of floating-point operations (FLOPs) by exploiting the sparsity or redundancy of these data structures. However, compression techniques also introduce challenges for software design and algorithm development, such as irregular code patterns and trade-offs between accuracy and memory usage. This research explores new software solutions and algorithms that can efficiently operate on compressed data structures.

This project focuses on making large, useful algorithms and models runnable on resource-constrained devices. We selected YOLOv11n and exported it to the NCNN framework to enable efficient, CPU-only inference on a Raspberry Pi 5. The RC car uses the Pi camera for detections and fuses them with ultrasonic sensor data via a C++ obstacle-avoidance pipeline; a Python loop sends the final speed and steering commands to the FMU to control the car. Benchmarks show usable speeds on the Pi 5, with a clear trade-off: smaller input images increase FPS but can reduce accuracy. We are targeting reliable corridor navigation, keeping the car centered and avoiding obstacles in real time. Next, we will design a low-latency autonomy stack for drones, selecting and optimizing algorithms to ensure stable, real-time flight on similarly constrained hardware.

The demand for fast and scalable matrix kernels is higher than ever, driven by rapid growth in fields such as artificial intelligence, bioinformatics, and climate modeling. Without engineers experienced in low-level programming models such as CUDA, performance tuning of sparse kernels becomes difficult and time-consuming. Parallel execution of a matrix kernel must ensure that the dependencies of each calculation have already been computed. To enforce the proper order of execution, current parallelization transformations commonly rely on affine array accesses, where array indices are statically predictable. Sparsity in matrices greatly reduces the number of dependencies, offering more opportunities for parallelization. However, it also introduces irregular array access patterns that complicate analysis. For example, sparse kernels commonly work with sparse matrix formats that use index arrays, such as compressed sparse column (CSC) and compressed sparse row (CSR), which lead to indirect array accesses, a form of irregular access. As a result, existing compilers often leave such code unoptimized, leaving performance gains on the table. This work proposes a sync-free, runtime-based transformation that automates loop parallelization in sparse kernels with loop-carried dependencies. It focuses on sparse triangular solvers applied to CSC and CSR matrices in order to develop a framework that can be generalized to other sparse kernels with similar properties. The foundation of this transformation is a runtime component that tracks the reads and writes of the kernel, which are then used to build a dependency DAG. This DAG drives a source-to-source transformation that generates a Triton kernel. The Triton kernel uses a sync-free approach to parallelism so that threads can execute as soon as their dependencies are met. Future work will investigate the properties that allow for parallelism and generalize the approach to a wide array of sparse matrix kernels.
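
To make the dependency structure concrete, the sketch below derives the dependency DAG of a lower-triangular CSR matrix from its index arrays and groups rows into levels that could run in parallel. Note that this is level-set scheduling, a simpler and more synchronization-heavy relative of the sync-free approach described above, written in plain Python; it is not the proposed transformation or its Triton output. The function names and the example matrix are assumptions for illustration.

```python
def dependency_levels(indptr, indices):
    """Group rows of a lower-triangular CSR matrix into dependency levels.

    Row i depends on every row j < i that has a stored entry L[i, j].
    Rows in the same level do not depend on one another.
    """
    n = len(indptr) - 1
    level = [0] * n
    for i in range(n):
        for k in range(indptr[i], indptr[i + 1]):
            j = indices[k]
            if j < i:  # off-diagonal entry: an edge j -> i in the DAG
                level[i] = max(level[i], level[j] + 1)
    groups = [[] for _ in range(max(level) + 1)] if n else []
    for i, lv in enumerate(level):
        groups[lv].append(i)
    return groups


def solve_lower_csr(indptr, indices, data, b):
    """Solve L x = b by forward substitution, visiting rows level by level."""
    x = [0.0] * len(b)
    for rows in dependency_levels(indptr, indices):
        # Every row in `rows` could be processed concurrently.
        for i in rows:
            acc, diag = b[i], None
            for k in range(indptr[i], indptr[i + 1]):
                j = indices[k]
                if j == i:
                    diag = data[k]
                else:
                    acc -= data[k] * x[j]  # indirect access through `indices`
            x[i] = acc / diag
    return x


# Hypothetical 4x4 lower-triangular matrix in CSR form.
indptr = [0, 1, 2, 4, 7]
indices = [0, 1, 0, 2, 1, 2, 3]
data = [2.0, 1.0, 1.0, 4.0, 3.0, 1.0, 2.0]
levels = dependency_levels(indptr, indices)
x = solve_lower_csr(indptr, indices, data, [2.0, 3.0, 9.0, 15.0])
```

Here rows 0 and 1 are independent, row 2 waits on row 0, and row 3 waits on rows 1 and 2. A sync-free scheme would let row 3 start as soon as those two rows finish, rather than waiting at a barrier between levels.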

Major Depressive Disorder (MDD) is a prevalent mental health condition affecting approximately 3.8% of the global population. Its progression is influenced by varying concentrations of specific biomarkers, including TNF-α, IL-6, VEGF, BDNF, and insulin. Electrochemical biosensors have emerged as promising tools for rapid, cost-effective detection of these biomarkers in biofluids such as saliva, sweat, and plasma, offering potential for point-of-care (PoC) applications. This study reviewed recent advancements (within the past five years) in electrochemical biosensors for detecting MDD-related biomarkers, focusing on materials, detection techniques, and design strategies that enhance sensitivity, selectivity, and applicability in real-world settings. Articles were evaluated for sensor materials, sensing methods, and performance parameters, including limit of detection (LOD) and linear range (LR). Commonly used materials included carbon-based compounds, polymers, and nanoparticles, all enabling detection of biomarkers at low concentrations, ranging from nanomolar to picomolar levels. Gold was the most widely used material due to its excellent conductivity, achieving LODs in the picomolar range. Sensing techniques such as cyclic voltammetry (CV) and electrochemical impedance spectroscopy (EIS) were frequently applied. Among the techniques reviewed, fast Fourier transform admittance voltammetry (FFTAV) stood out as a particularly effective method, enabling simpler sensor designs without the need for electroactive probes. Notable innovations include a dual-pathway biosensor capable of simultaneously detecting BDNF and IL-6 in serum using Pb-CDs and Fe-CDs, respectively, and a gold nanoparticle-modified glassy carbon electrode that achieved femtogram-per-milliliter detection of VEGF in spiked human serum. Recent developments in electrochemical biosensors show strong potential for specific multi-analyte detection of MDD biomarkers.
However, challenges remain, particularly in establishing biofluid-specific reference ranges and validating sensor performance in complex, real-world environments. Future directions may include the development of wearable biosensors for real-time monitoring, integration with artificial intelligence for personalized data analysis, and improved sensor stability for clinical applications.

Large language models like LLaMA-7B rely on very large floating-point weight and attention matrices, which create significant storage and deployment costs. This study examines whether simple, statistics-based preprocessing can improve lossy compression of those matrices by reducing their effective entropy. Weight and attention matrices were partitioned into square submatrices at multiple scales (block sizes of 512, 256, 128, 64, 32, and 16). For each scale, individual submatrices were measured for entropy and grouped based on similarity in their statistical profiles; they were then reordered so that blocks with comparable entropy and value characteristics are placed near one another. This reordering based on entropy and similarity is intended to make hidden local patterns more visible to the compression algorithms. Compression was applied to both the original and transformed matrices using FPZIP (lossy, precision-controlled) and Zstandard, with primary evaluation metrics including uncompressed size and compression ratio. Initial results show little to no improvement in overall compression, but a consistent pattern is observed: smaller block sizes exhibit greater variance in entropy, and the grouping and reordering approach produces stronger effects at those finer scales. The methodology is designed to be flexible in how submatrices are chosen or constructed. Future work will expand the selection and assembly strategies for blocks, add additional measurements such as reconstruction error, local correlation, and statistical divergence to better capture similarity and structure, and improve entropy estimation. Further testing will apply the preprocessing pipeline to a wider variety of matrix types and systematically evaluate how these transformations affect the balance between compression efficiency and preservation of important matrix structure. 
The ultimate aim is to establish reliable, data-driven preprocessing workflows that consistently enhance the effectiveness of existing lossy compression tools on large floating-point data.
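
The block-level measurement and reordering step can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's pipeline: entropy is estimated from a fixed-width histogram, blocks are ordered by entropy alone (the study also groups by broader statistical similarity), and the matrix side is assumed to be a multiple of the block size. All function names and the 16-bin default are hypothetical.

```python
import math
from collections import Counter


def block_entropy(values, lo, hi, bins=16):
    """Shannon entropy in bits of a histogram of `values` over [lo, hi]."""
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def split_blocks(matrix, size):
    """Yield ((row, col), flat_values) for each size x size block."""
    n = len(matrix)
    for r in range(0, n, size):
        for c in range(0, n, size):
            yield (r, c), [matrix[i][j]
                           for i in range(r, r + size)
                           for j in range(c, c + size)]


def order_blocks_by_entropy(matrix, size, bins=16):
    """Return (entropy, block_origin) pairs sorted from low to high entropy."""
    flat = [v for row in matrix for v in row]
    lo, hi = min(flat), max(flat)
    return sorted((block_entropy(vals, lo, hi, bins), pos)
                  for pos, vals in split_blocks(matrix, size))


# Hypothetical 4x4 matrix split into 2x2 blocks.
m = [[0, 0, 0, 0],
     [0, 0, 15, 15],
     [0, 5, 0, 0],
     [10, 15, 0, 15]]
order = order_blocks_by_entropy(m, 2)
```

The returned block order is the permutation that would have to be stored alongside the compressed data so that the original layout can be restored after decompression.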

People with diabetes need to monitor their blood glucose levels frequently. Current methods are invasive and painful, creating a need for noninvasive wearable devices that can measure blood glucose. Recent research has combined multiple sensing modalities and used machine learning to estimate blood glucose concentration from the results. Our research aims to perform this sensor fusion using an analog neural network (ANN). We used Altium Designer software to design a PCB that would obtain a PPG waveform using the MAX30102 module, measure tissue bioimpedance at 50-100 kHz using the AD5933 network analyzer, and measure tissue reflectance of 845 nm and 1040 nm NIR light using a photodiode. The proposed procedure is to train a simple neural network on volunteer data collected by this device, along with blood glucose data collected by a glucometer. The weights and biases of the trained neural network would be used to configure an ANN. The accuracy, latency, and energy consumption of the ANN would be compared to those of its software counterpart in further trials. Accurate, efficient performance from the ANN would demonstrate the utility of analog computing in medical devices and could set a precedent for the use of ANNs in many other biomedical applications in the future.

Human Activity Recognition (HAR) is the classification of human activities based on sensor data, often from accelerometers or gyroscopes. HAR has many applications in healthcare, particularly for outpatient monitoring of individuals with chronic conditions such as mental health disorders or diabetes. The data can give clinicians insight into behavioural patterns and early indicators of relapse or emergency revisits. This project aims to develop a cost-effective, lightweight, and real-time HAR system using a Raspberry Pi 4B and an accelerometer. The wearable system continuously records tri-axial acceleration and uses artificial intelligence to classify activities as walking, walking upstairs, walking downstairs, sitting, standing, or lying. Three models were used for activity recognition: a seven-feature support vector machine (SVM), a custom feed-forward neural network, and a contrastive learning model developed by Eldele et al. from Nanyang Technological University. All models were trained on the UCI HAR Dataset, a publicly available accelerometer dataset with thirty subjects performing six actions. A custom dataset was collected using the developed HAR system and used to fine-tune the neural network and the contrastive learning model. The fine-tuned neural network and contrastive learning model achieved 91.21% and 90.68% accuracy, respectively, on intra-subject testing. The SVM achieved 78.75% on the UCI HAR Dataset but failed to generalize to the custom dataset, achieving 16.76%. Results show that performance decreases with inter-subject testing and orientation changes, suggesting that the models struggle to generalize across different domains. This project has demonstrated the feasibility of an affordable, lightweight, and real-time HAR system for classifying human activities; however, it also highlights the obstacles posed by orientation and domain changes.
Future improvements involve expanding the custom dataset to improve generalization and incorporating cloud-based storage for easier data access and analysis.
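
For readers unfamiliar with how accelerometer streams become classifier inputs, the sketch below segments tri-axial samples into overlapping windows and computes a few simple statistics per window. The specific features (per-axis mean and standard deviation plus signal magnitude area), the window sizes, and the function names are assumptions for illustration; they are not necessarily the seven features used by the SVM described above.

```python
import math


def windows(samples, size, step):
    """Yield fixed-size windows of (x, y, z) samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]


def window_features(window):
    """Per-axis mean and standard deviation, plus signal magnitude area."""
    n = len(window)
    feats = []
    for axis in range(3):
        vals = [s[axis] for s in window]
        mean = sum(vals) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in vals) / n)
        feats += [mean, std]
    # Signal magnitude area: mean of the summed absolute axis values.
    sma = sum(abs(x) + abs(y) + abs(z) for x, y, z in window) / n
    return feats + [sma]


# Hypothetical stationary recording: gravity (1 g) on the z axis only.
samples = [(0.0, 0.0, 1.0)] * 8
segments = list(windows(samples, size=4, step=2))  # 50% overlap
feature_rows = [window_features(w) for w in segments]
```

Because the mean of each axis encodes how gravity projects onto the sensor, features like these shift when the device is worn in a different orientation, which is consistent with the orientation sensitivity reported above.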

Simultaneous Localization and Mapping (SLAM) is a fundamental capability for autonomous navigation in unknown environments. However, conventional SLAM systems often require significant computational resources, making them unsuitable for micro aerial vehicles (MAVs) with strict power and weight constraints. This research explores a lightweight SLAM solution designed for deployment on resource-constrained drones, focusing on achieving real-time performance without external computation support.

The system adopts a feature-based SLAM front end and a back end based on selective keyframe pose-graph optimization. It was tested by the NanoSLAM team (Vlad Niculescu et al.) using simulated flight data and real-time experiments on a Crazyflie 2.1 quadcopter equipped with four monocular cameras and an onboard processor.

Preliminary results demonstrate that the system is capable of constructing consistent local maps in indoor environments while maintaining real-time operation at over 15 Hz. The pose estimation accuracy remains within an acceptable range compared to ground truth.

This research highlights the feasibility of deploying SLAM algorithms in extremely constrained environments, providing insights into the trade-offs between accuracy, resource usage, and system responsiveness.

Investigating the surface and microstructure of nuclear reactor materials gives insight into the failure modes and longevity of reactor components. Positron Annihilation Spectroscopy (PAS) is a novel material characterization technique that uses the positron, the positively charged antiparticle of the electron, to investigate the microstructure of materials. By controlling the velocity of the positron as it enters the material, different depths below the material surface can be probed. When the wavefunctions of a positron and an electron overlap, they annihilate and release two gamma photons of 511 keV. Defects and vacancies in the material lattice act as potential wells where positrons become “trapped” and annihilate. Annihilation can occur with outer- or inner-shell electrons of the host material, which produces a Doppler-broadened spectrum. By comparing the 511 keV photopeak and the wings of the Gaussian distribution versus implantation depth, the mechanisms of oxide and hydride formation can be better understood. In this blind study, four surface-treated samples of Zircaloy-4 have undergone Doppler-broadened PAS to better understand the measurement technique and its potential for predicting and understanding failure modes of reactor materials.
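
The comparison of the photopeak centre with its wings is commonly summarized by two line-shape parameters: S (the fraction of counts in a narrow central window) and W (the fraction in the wings). The sketch below shows one way these could be computed from a measured spectrum. The window limits, the function name, and the sample spectrum are assumptions for illustration, and background subtraction is omitted; actual window choices vary between laboratories and are not stated in the abstract.

```python
def s_w_parameters(energies_kev, counts, centre=511.0,
                   s_half_width=1.0, w_inner=3.0, w_outer=6.0):
    """Return (S, W) line-shape parameters for a Doppler-broadened peak.

    S: fraction of peak counts within `s_half_width` of the centre.
    W: fraction of peak counts between `w_inner` and `w_outer` from the centre.
    The window limits (in keV) are illustrative assumptions.
    """
    total = s_counts = w_counts = 0.0
    for energy, c in zip(energies_kev, counts):
        d = abs(energy - centre)
        if d > w_outer:
            continue  # outside the peak region entirely
        total += c
        if d <= s_half_width:
            s_counts += c
        elif d >= w_inner:
            w_counts += c
    if total == 0:
        raise ValueError("no counts in the peak region")
    return s_counts / total, w_counts / total


# Hypothetical spectrum sampled every 1 keV from 505 to 517 keV.
energies = [505.0 + i for i in range(13)]
counts = [1, 2, 4, 10, 30, 80, 120, 80, 30, 10, 4, 2, 1]
s_param, w_param = s_w_parameters(energies, counts)
```

Annihilation with low-momentum outer-shell electrons contributes mostly to the central window, while high-momentum inner-shell electrons populate the wings, so tracking S and W against implantation depth gives a depth profile of how the electronic environment at the annihilation site changes.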